Posted

January 31, 2019

Tackling the Big Questions in Memory

by Ryan Gibson

As we enter the era of Big Data and Artificial Intelligence (AI), it is amazing to think about how dramatically the requirements for memory solutions could shift. The amount of data humans generate every year is already astounding, yet the total is expected to increase five-fold in the next few years as machine-generated data grows. The emerging 5G mobile network will further compound this growth.

As more and more data is generated, we must be able to manage and store it all, and—more importantly—have fast access to it to make real-time decisions. This is creating considerable opportunities in our industry to deliver more efficient memory technologies. These new requirements are bringing concepts like in-memory computing to the forefront of innovation.

I had the honor of moderating the Applied Materials panel session on “Memory Trends for the Next Decade” at the International Electron Devices Meeting (IEDM) in December. The panel included Scott Gatzemeier, Micron; Jaeduk Lee, Samsung; Renu Raman, SAP; Jung Hoon Lee, SK hynix; and Manish Muthal, Xilinx, who each brought deep knowledge and a unique perspective to the discussion.

We heard from our panelists about the opportunities and challenges in enabling the next decade of memory for Big Data and AI technologies. This effort involves figuring out the architectural and device changes required to move memory closer to the processor. We also need to keep increasing the bandwidth and the density of existing memory devices to accommodate more data.

The need for innovation in new memory solutions extends to materials, architectures, 3D structures within the chip, new techniques to shrink geometries, and advanced packaging. These are all areas of focus for Applied, as part of a “New Playbook” for semiconductor design and manufacturing.

Our panelists shared with us the challenges of scaling traditional DRAM and NAND devices, and gave us hope that there are innovative methods in development to keep us moving forward for several more years. I’ll highlight a few takeaways from the panel.

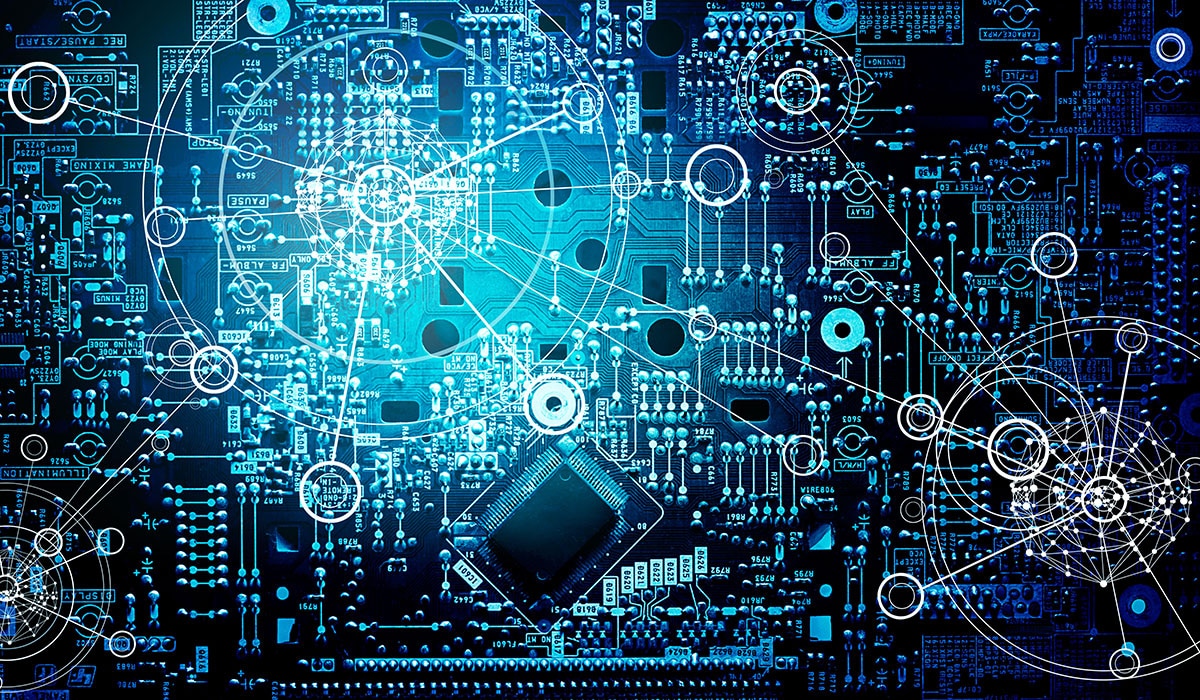

We’ve lived in the world of DRAM and NAND for decades, but today these are becoming specialized and segmented to address graphics memory, DDR, SSDs, etc. Just as 3D NAND scaling continues through stacking of the array and new layout schemes, there will likely be new DRAM architectures to support high-bandwidth memory. This should allow DRAM to continue as a workhorse technology for at least another decade.

Beyond scaling mainstream memory technologies, panelists agreed that entirely new memory architectures are needed to achieve the lower latency and higher speed performance required to bridge the growing gaps in existing capability.

This raises the question: what kind of emerging memories will be needed for high-performance machine learning and other AI applications? Not surprisingly, several new memories unique to AI accelerators are under development by a number of companies. A pragmatic approach suggested for now is to leverage some of these memories and use software to manage them.

The industry can’t afford delays in R&D. Panelists pointed out how important it is to start working now, especially on the revolutionary scaling path toward neuromorphic computing designs. They mentioned R&D activity in new materials and designs for this technology, and some believe that in about 10 years the industry may make the transition to neuromorphic systems.

Beyond the memory device, there are many opportunities for improvements in system architecture. The panel explored this topic and how to cost-effectively move processing functions closer to the data, so that we can start scaling performance in a power-efficient manner. As an industry, we need to figure out how to move various computing functions—like compression, encryption, query offloads, machine learning and video transcoding—closer to the memory.
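To make the data-movement argument concrete, here is a toy sketch of a query offload. It is not something the panel presented. The record count, record size, selectivity and function names are all made-up assumptions for illustration. It simply compares how many bytes cross the memory bus when a filter runs on the host versus next to the memory.

```python
# Toy model: bytes that cross the memory bus for a simple filter query.
# All numbers and names below are hypothetical illustration values.


def bytes_moved_host_filter(num_records: int, record_bytes: int) -> int:
    """Host-side filtering: every record travels to the processor first."""
    return num_records * record_bytes


def bytes_moved_near_memory_filter(
    num_records: int, record_bytes: int, selectivity: float
) -> int:
    """Near-memory filtering: only the matching records travel to the processor."""
    matching_records = round(num_records * selectivity)
    return matching_records * record_bytes


num_records = 1_000_000   # assumed table size
record_bytes = 64         # assumed size of one record
selectivity = 0.01        # assumed fraction of records that match the query

host = bytes_moved_host_filter(num_records, record_bytes)
near = bytes_moved_near_memory_filter(num_records, record_bytes, selectivity)
reduction = host / near

print(f"host-side filter:   {host:,} bytes moved")
print(f"near-memory filter: {near:,} bytes moved")
print(f"reduction factor:   {reduction:.0f}x")
```

With these assumed values, the near-memory filter moves one hundredth of the data. Real savings depend on the workload, the selectivity of the query and the cost of the logic placed near the memory, none of which this sketch models.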

Solving these memory challenges will require multiple approaches: at the device level, at the system architecture level and at the software level. It will likely take a combination of these factors to sustain memory scaling over the next decade. This is an exciting time in our industry with lots of opportunities to play a critical role in one of the biggest technological inflections of our lifetime.

Tags: memory, Big Data, artificial intelligence, 3D NAND, DRAM

Ryan Gibson

Account General Manager

Ryan Gibson is an account general manager at Applied Materials, helping shape the company's product roadmaps to meet the technical requirements of customers. He received his B.S. in electrical engineering from Oregon State University.

Now is the Time for Flat Optics

For many centuries, optical technologies have utilized the same principles and components to bend and manipulate light. Now, another strategy to control light—metasurface optics or flat optics—is moving out of academic labs and heading toward commercial viability.

Seeing a Bright Future for Flat Optics

We are at the beginning of a new technological era for the field of optics. To accelerate the commercialization of Flat Optics, a larger collaborative effort is needed to scale the technology and deliver its full benefits to a wide range of applications.

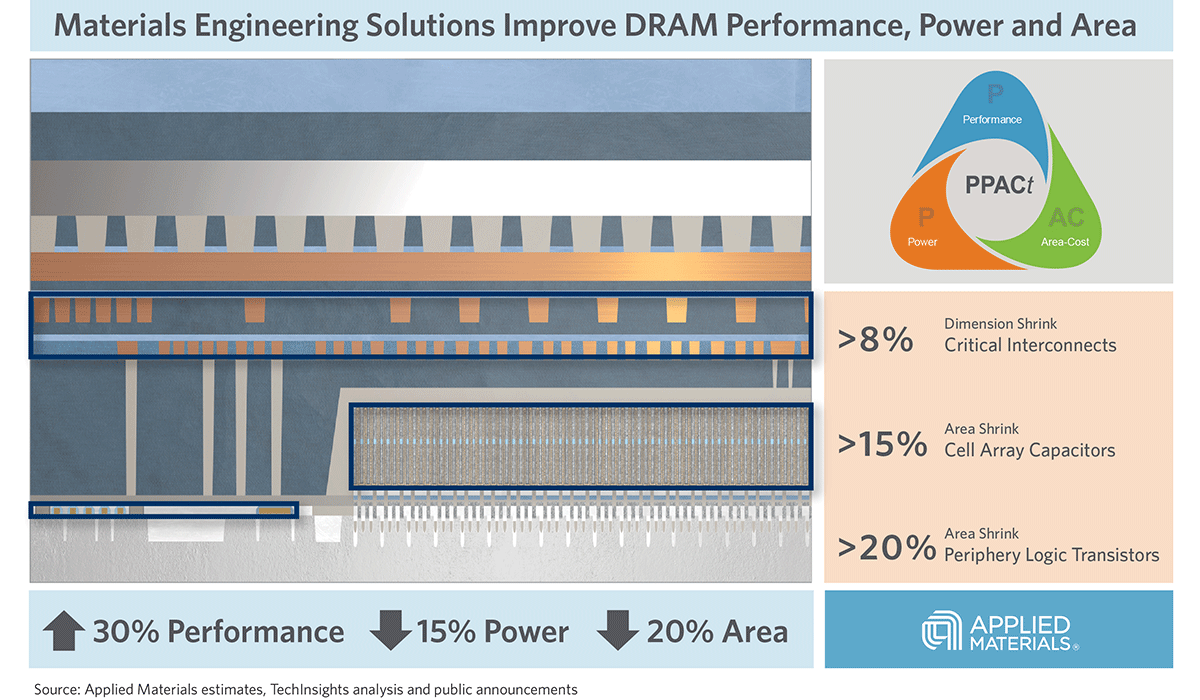

Introducing Breakthroughs in Materials Engineering for DRAM Scaling

To help the industry meet global demand for more affordable, high-performance memory, Applied Materials today introduced solutions that support three levers of DRAM scaling.