Posted

November 29, 2018

Memory Trends for the Next Decade

by Gill Lee

As emerging big data and artificial intelligence (AI) applications, including machine learning, drive innovations across many industries, the issue of how to advance memory technologies to meet evolving computing requirements presents several challenges for the industry.

The mainstream memory technologies, DRAM and NAND flash, have long been reliable industry workhorses, each optimized for a specific purpose: DRAM as main memory for processing large amounts of data, and NAND flash as nonvolatile memory for data storage. Today, however, both are being challenged by AI workloads that require direct, faster access to memory.

Among the questions facing the industry: What trends will drive memory technologies over the next decade? How will the industry scale and evolve the mainstream memories to provide high-performance, high-density storage and greater functionality? What kinds of new memories will be needed for high-performance machine learning and other AI applications, and how can memory technology accelerate overall performance?

The industry is evaluating a variety of options for achieving improvements and coming up with different solutions. There is much deliberation over scaling paths, the types of memory needed for future applications and how system architectures will change to facilitate new concepts like in-memory computing.

This changing memory environment will be the focus of a Technical Panel Applied Materials is hosting on December 4 in San Francisco (in conjunction with the International Electron Devices Meeting). The panel will feature distinguished speakers from Micron, Samsung, SAP, SK hynix and Xilinx for a thought-provoking discussion on the questions I have outlined above and related topics.

Certain characteristics of the future memory landscape are already discernible. It is clear that the role of memory will expand in the AI era.

Vertical scaling continues for 3D NAND, with more layer pairs and multi-tier schemes being pursued to increase storage density. With DRAM, geometric lateral scaling continues but is slowing, and, as with 3D NAND, materials innovation will be needed for further scaling. There will likely be more types of special-purpose DRAM for various advanced applications; the diversity of DRAM is often overlooked.

However, even as the mainstream memories are scaled toward greater performance and functionality, they may still not be sufficient to support AI technologies like high-performance machine learning. These applications need memory that is not only larger in capacity but also faster. The consensus is that new, different types of memory schemes and technologies are needed.

In-memory computing is an emerging concept making headlines as a means of extending and substantially improving memory. This approach places some logic function inside the memory so that it does more than simply store data. Another idea is to design computing architectures around memory, much as they are currently designed around the microprocessor. Although memory-centric computing is still in its early stages, it is already evident that the traditional boundaries of memory tasks are blurring.

Packaging is another key enabler for AI, supporting high-bandwidth memory and the heterogeneous integration of logic and memory for high-speed access. The question is which of the different memory packaging configurations will emerge as the optimal approach for cost and performance.

In summary, diverse memory technologies are needed to deliver the performance and functionality gains that will enable the next era of computing. Importantly, this effort will require innovations across the ecosystem, from materials to systems. I hope you can join us for what promises to be an insightful discussion of this evolving environment by a panel of experts. Here is the link to register.

Tags: memory, AI, machine learning, 3D NAND, DRAM, packaging

Gill Lee

Managing Director, Technical Marketing / Strategic Programs - Semiconductor Products Group

Gill Lee is managing director of technical marketing / strategic programs in the Semiconductor Products Group at Applied Materials. He joined Applied in 2009, having worked previously in memory technology development at Qimonda/Infineon/Siemens in New York, France and Germany. Gill earned his M.S. in materials science and engineering from POSTECH University, South Korea. He has authored a number of papers on CMOS integration and process development and holds several related patents.

Now is the Time for Flat Optics

For many centuries, optical technologies have utilized the same principles and components to bend and manipulate light. Now, another strategy to control light—metasurface optics or flat optics—is moving out of academic labs and heading toward commercial viability.

Seeing a Bright Future for Flat Optics

We are at the beginning of a new technological era for the field of optics. To accelerate the commercialization of Flat Optics, a larger collaborative effort is needed to scale the technology and deliver its full benefits to a wide range of applications.

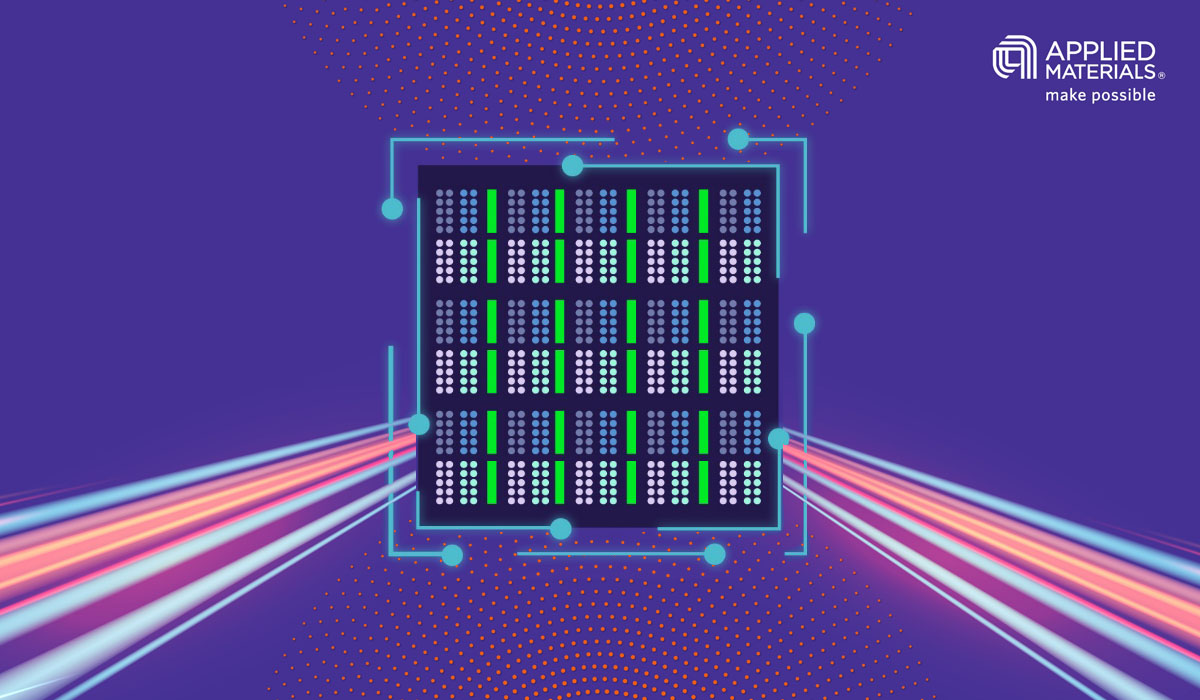

Introducing Breakthroughs in Materials Engineering for DRAM Scaling

To help the industry meet global demand for more affordable, high-performance memory, Applied Materials today introduced solutions that support three levers of DRAM scaling.