Posted

January 25, 2019


AI is Changing the Way the Industry Thinks


At SEMI’s recent Industry Strategy Symposium, I had the honor of participating on an Artificial Intelligence (AI) panel discussing the challenge of “Filling the Gap between Smart Speakers and Autonomous Vehicles.” Joining me were Dr. Federico Faggin, the inventor credited with designing the world’s first microprocessor while at Intel (the 4004); Dr. Michael (Mike) Mayberry, chief technology officer at Intel Corporation; and Dr. Terry Brewer, founder, president and CEO of Brewer Science. Dan Hutcheson, CEO of VLSIresearch, was the moderator. Following are some of the key points I took away from the discussion.

The emerging AI and Big Data era promises to be the biggest computing wave yet, becoming pervasive across industries, from healthcare to transportation. Enabling this future will require technology breakthroughs throughout the industry ecosystem, from materials to systems. At the center of making AI possible is the development of better neural processors. But to achieve this, we need a deeper understanding of how intelligence works in humans.

The panel began with a discussion on the progression of CPU performance, from the Intel 4004 to the latest chips that deliver teraflops of performance. But this is just the beginning of creating the cost-effective and power-efficient solutions needed for AI workloads. Now that we are trying to emulate the brain, the requirements and challenges to overcome are enormous.

AI is still based on neural network technology, which in turn is based on a computer simulation that obeys a mathematical model of what our brain does. That is two steps removed from the actual operation of the brain. And without a sufficient set of data from which to learn patterns and correlations, a neural network can only go so far.
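To make the "mathematical model" concrete, here is a minimal sketch of one artificial neuron and one small layer in plain Python. It was not shown on the panel; the function names, the sigmoid activation and all of the numbers are assumptions chosen only for illustration.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: a weighted sum of inputs squashed by a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weight_rows, biases):
    """A layer is several neurons sharing the same inputs,
    i.e. a matrix-vector product followed by an activation."""
    return [neuron(inputs, row, b) for row, b in zip(weight_rows, biases)]

# Hypothetical values, chosen only for illustration.
inputs = [0.5, -1.0]
weight_rows = [[1.0, 0.5], [-0.5, 2.0]]
biases = [0.0, 0.1]

outputs = layer(inputs, weight_rows, biases)
print(outputs)
```

In a real network the weights are not written by hand as they are here. They are learned from data, which is why the size and quality of the data set limit how far the network can go.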

While the industry strives to keep innovating, what we really need to enable AI is a disruption of the continuous improvement mindset the industry has been operating on for a very, very long time. The industry needs a disrupter, and AI can serve as that disrupter.

One example discussed on the panel was the industry’s ability to generate about 30,000 data points per second. That increase enables a leap in analysis comparable to the move from standard displays to ultra-high-definition displays, which let us see things that could not be seen before. This can be disruptive.

While AI is capturing the world’s attention today, the term was actually coined decades ago, in 1956, and had a surge in popularity in the 1970s and 1980s, a period that included rudimentary self-driving cars. Since then, the rapid pace of continuous improvement has turned many AI concepts into practical things that work fairly well today. Digital voice assistants are one example: the concept existed 40 years ago, but the computing power was insufficient to implement it.

What triggered the new rise of AI is a massive increase in data that enables truly useful models. Having the internet and labeled data, it was pointed out, allows us to build more complex models, in turn driving the need for even faster hardware and better statistical algorithms.

Just as demand for power-efficient computing is increasing with the benefits of AI, the mechanism for achieving it, Moore’s Law, has slowed over the past seven to eight years. Now, doubling the available transistors requires five or six years, and we no longer get the 10 to 15 percent power improvement per year that we did before. This slowing has severe consequences for the future of AI. To reliably increase computing performance and energy efficiency each year, we need fundamental changes in chip design and architecture.
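To put the doubling periods quoted above in perspective, the short sketch below converts a doubling period into the compound annual growth rate it implies. The two-year baseline is my assumption, based on the commonly cited historical cadence of Moore’s Law, and was not a figure from the panel. The function name is hypothetical.

```python
def annual_growth(doubling_years: float) -> float:
    """Compound annual growth rate implied by a given doubling period."""
    return 2 ** (1 / doubling_years) - 1

# 2 years is an assumed historical baseline; 5 and 6 years are the
# doubling periods mentioned in the text.
for years in (2, 5, 6):
    rate = annual_growth(years)
    print(f"doubling every {years} years -> {rate:.1%} per year")

# doubling every 2 years -> 41.4% per year
# doubling every 5 years -> 14.9% per year
# doubling every 6 years -> 12.2% per year
```

Under these assumptions, the yearly gain in available transistors drops from roughly 41 percent to somewhere between 12 and 15 percent. That gives a rough sense of how much ground design and architecture changes would need to make up.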

It’s time to question long-held assumptions, up and down the AI stack. At the top of the pyramid, computing for most of the past 60 years has been performed on a traditional CPU. Lately, many applications have transitioned to using graphics processors—in an evolutionary path to improving AI—because they’re faster at doing matrix math. The next step is taking advantage of more purpose-built processors and computers to deliver more energy-efficient AI computation. With even greater understanding, instead of implementing a simulation of brain-inspired neural networks, the industry should consider implementing actual neural networks.

At the fabrication level, the industry is still building chips using essentially the same methods employed for decades. Here, too, fundamental assumptions must be challenged: we need to rethink depositing, modifying and removing materials as discrete functions performed in discrete tools. To continue making progress and improving the capabilities of advanced chips fabricated at atomic scale, we need breakthroughs in materials engineering. We are already seeing some of this come to fruition with novel methods like Applied’s Integrated Materials Solutions.

Where do we go from here? The panel raised many interesting questions and outlined many new challenges. The conclusion I came away with is that the industry recognizes the need for new breakthroughs and is working hard to deliver innovations for AI applications.

Tags: artificial intelligence, AI, neural networks, Moore’s Law, Integrated Materials Solutions

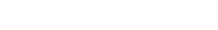

Now is the Time for Flat Optics

For many centuries, optical technologies have utilized the same principles and components to bend and manipulate light. Now, another strategy to control light—metasurface optics or flat optics—is moving out of academic labs and heading toward commercial viability.

Seeing a Bright Future for Flat Optics

We are at the beginning of a new technological era for the field of optics. To accelerate the commercialization of Flat Optics, a larger collaborative effort is needed to scale the technology and deliver its full benefits to a wide range of applications.

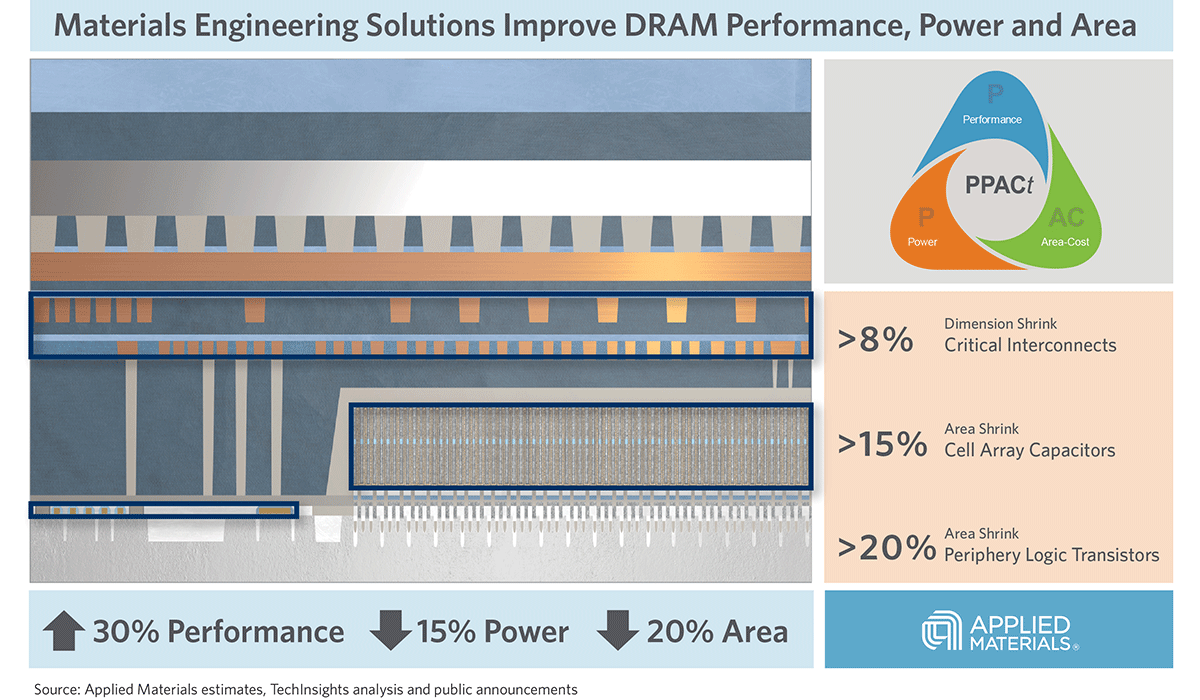

Introducing Breakthroughs in Materials Engineering for DRAM Scaling

To help the industry meet global demand for more affordable, high-performance memory, Applied Materials today introduced solutions that support three levers of DRAM scaling.