Posted October 23, 2018


VC Opportunities in the Rapidly Growing AI Developer Ecosystem


In this blog post, I’ll highlight my takeaways from the recent AI Hardware Summit where I participated as a panelist. The conference’s focus on developing hardware accelerators for neural networks and computer vision attracted companies from across the ecosystem – AI chip startups, semiconductor companies, system vendors/OEMs, data center providers, financial services companies and VCs, which is where I fit in as an Investment Principal at Applied Ventures (the VC arm of Applied Materials). While AI is still coming of age, it was clear from the conference that the AI developer community is rapidly growing, offering a wide range of investment opportunities.

For context, it’s important to note what kicked off the current race to build AI accelerator hardware. Neural networks, and even deep learning, are not new discoveries: the algorithms in use today are similar to those available in the 1980s and ’90s. Yet the hardware industry at large did not move toward AI-specialized products until relatively recently.

The signal that this situation was changing is the dramatic increase in investments and corporate acquisitions. Examples include Intel’s acquisitions of Nervana and Movidius in 2016, followed in 2017 by a series of announcements related to v1 of Google’s Tensor Processing Unit™ (TPU) integrated circuit. Although many startup companies have addressed this space for years, investment from VCs and corporations spiked significantly in the last two years compared to the previous 10 years.

Discussions at the conference about a roadmap for AI chip development included questions on the role and continued viability of Moore’s Law scaling. Opinions ranged from “Moore’s Law is far from dead” to “Moore’s Law is definitely dead,” a viewpoint mostly held by the startups at the conference. Regardless of which side of the fence you’re on, many see disruptive chip startups as playing a key role in delivering faster, more efficient chips for AI workloads.

To provide a better understanding of the emerging AI landscape from a VC perspective, I’ll summarize the key changes taking place and the issues being addressed, and share my viewpoints from the AI trends panel I participated in:

Why companies are planning to design and build their own AI chips

Until now, general-purpose processors like data center CPUs have required most users to optimize their software for a single industry-standard instruction set architecture (ISA). AI workloads, especially deep learning training, are so compute-intensive that hyperscale users have begun to build their own frameworks to use more streamlined chips like GPUs and purpose-built ICs like the TPU. It’s an open question whether a new ISA for AI will emerge, or whether the “roll your own” approach we are seeing among the largest users of data center infrastructure will continue.

Some key problems hardware startup companies are trying to address

While the focus of the conference was AI hardware, inefficiencies rooted in both software (such as statistical inefficiency) and hardware (system, architecture and device) need attention.

The number one issue is training inefficiency: the time it takes to discover and refine a machine learning model that can be deployed to make useful decisions (inferences). In one keynote, it was noted that some models can take up to 22 days to train. Training inefficiency limits the overall usefulness of AI and hinders the progress of the entire IT industry, and such long cycle times prevent more interesting questions from being explored with machine learning. But the root causes of long training cycles are not one-dimensional: addressing them requires as much improvement in statistical and algorithmic frameworks as in the hardware architecture itself, a daunting challenge for a chip startup.

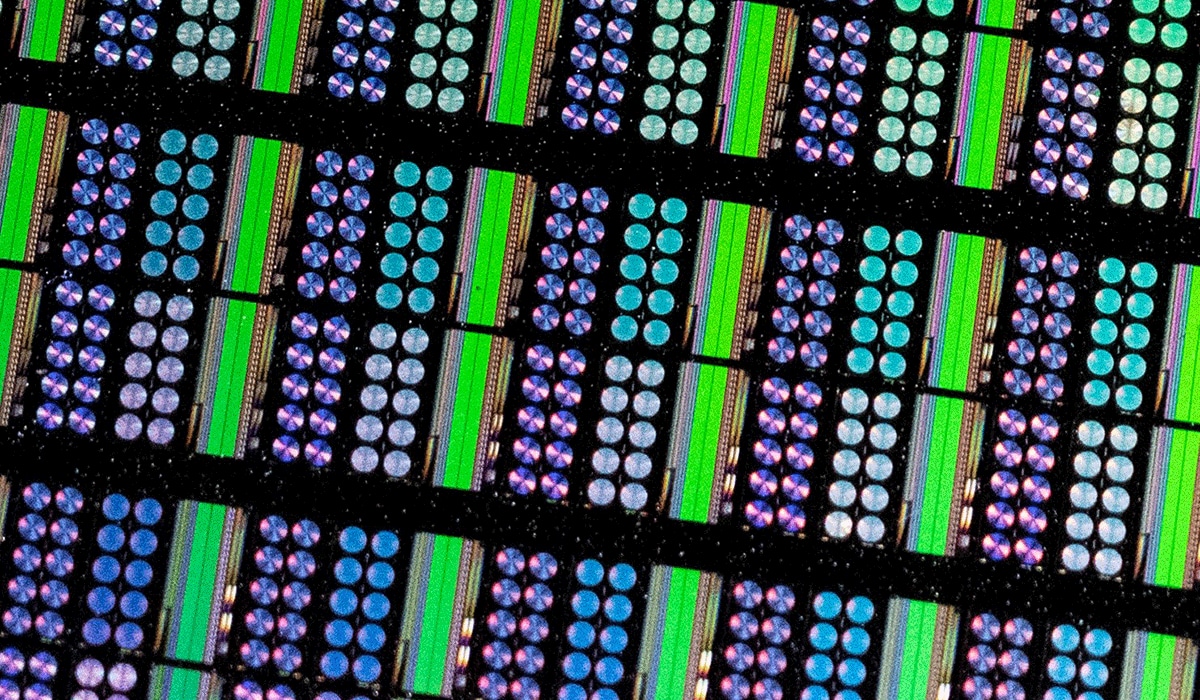

On the other hand, using trained models to make inferences in the data center is where many startups see the best near-term opportunity. Because a key figure of merit for inference is latency, the time needed to respond to a request, startups are looking to cut the time and energy spent accessing external DRAM by bringing the memory cells closer to the processor’s execution cores. In some cases, the memory is moved from an external bus onto the package (a System-in-Package, or SiP); in more extreme cases, the memory is moved onto the die itself by maximizing the amount of SRAM that fits in the chip area.
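To make the latency argument above more concrete, here is a back-of-the-envelope sketch using the textbook average memory access time (AMAT) model: AMAT = hit time + miss rate × miss penalty. The model is not from the original post, and the function name `amat` and every number in it (a 2 ns on-chip hit time, a 100 ns external DRAM penalty, and the two miss rates) are hypothetical placeholders chosen only to show the shape of the trade-off, not measurements of any real chip.

```python
# Back-of-the-envelope average memory access time (AMAT) model.
# All numbers below are hypothetical placeholders, not measured data.

def amat(hit_time_ns: float, miss_rate: float, miss_penalty_ns: float) -> float:
    """Return average access time: hit time + miss rate * miss penalty."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time_ns + miss_rate * miss_penalty_ns


HIT_NS = 2.0             # assumed on-chip SRAM access time
DRAM_PENALTY_NS = 100.0  # assumed extra cost of going out to external DRAM

# Small on-chip buffer: half of all accesses fall through to external DRAM.
small_sram = amat(HIT_NS, 0.5, DRAM_PENALTY_NS)
# Die area maximized for SRAM: only 1 in 8 accesses goes off-chip.
large_sram = amat(HIT_NS, 0.125, DRAM_PENALTY_NS)

print(f"small on-chip memory: {small_sram} ns")  # 52.0 ns
print(f"large on-chip memory: {large_sram} ns")  # 14.5 ns
```

With these assumed numbers, keeping more of the working set on the die cuts the average access time from 52 ns to 14.5 ns. Similar reasoning applies to energy, since an off-chip DRAM access typically costs far more energy than an on-chip SRAM access.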

Chances for startup companies in a hugely capital-intensive industry

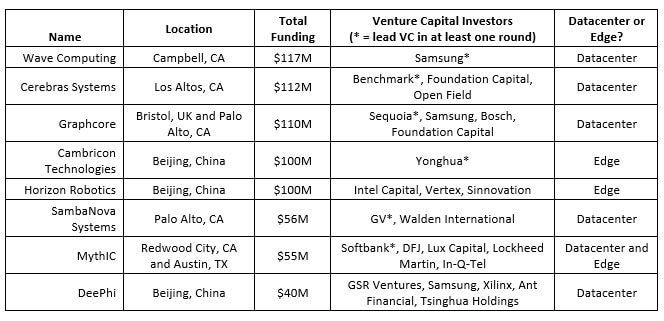

Startups (when viewed en masse) are exceptionally good at market discovery. They may be the first companies to find promising areas of opportunity for accelerators and ASICs to grow their markets. This is borne out by the amount of funding for AI hardware startups, which has climbed substantially since 2015. More than a handful of companies have raised funds in excess of $100 million, some with valuations in the high hundreds of millions (Table 1). All of the leading companies are fabless and have made progress towards demonstrating silicon performance, while using relationships with strategic partners or foundries to contain development costs. Although some claim to have launched products, the volumes are too small to make an impact on the AI chip market for 2018; it looks like 2019 is the year many companies plan to ship products.

Table 1. Source: Jerry Yang @ Hardware Club (as of mid-2018)

Viewpoints from the AI Trends Panel

As a panel of investment representatives, we’re aware it’s early days in the AI accelerator game.

AI trends panel at the AI Hardware Summit

For hyperscale cloud services, there is interest in companies whose designs increase efficiency for large workloads and offer a measurable impact on energy or time savings. AI-specific hardware is estimated at only a few percent of the size of the total server CPU market. Such chips, especially GPUs, are in high demand relative to supply, giving the makers of those products a large value surplus. Startups see an opportunity to capture some of that surplus, and the cloud providers themselves are interested in diversifying their vendor base.

Another viewpoint is that a strong team with a proven ability to operate and scale must be part of the criteria for success. Financial VC firms have generally not been as active in funding chip startups, but may fund specific high-growth, high-return opportunities as they arise. Such investments may be justified by a vertical strategy rather than by a thesis based on AI hardware.

My view is that, in the near term, changes in architecture and frameworks that exploit the large inefficiencies of general-purpose processor ISAs will be how companies capture market share. It’s not uncommon to hear claims of 10x to 1,000x gains in throughput or operations per watt (Ops/W) for specific workloads, so we should soon see whether these claims hold up. The startups’ first moves will likely be to take up this slack, which can potentially deliver large gains without the need to pursue riskier or more expensive leading-edge process technology.

Applied Ventures’ view of the opportunities

For the Applied Ventures strategic investment fund, the best AI-related investments are ones Applied Materials can potentially access through technical or commercial collaboration, or both. The potential to tie in to Applied’s leadership in materials engineering technology and provide that to a small-scale startup is the advantage that we can bring into deals. While we are looking for companies that address significant industry challenges, such as increased throughput or efficiency when running AI frameworks and models, we particularly want to find those cases where the involvement of Applied can have the most direct impact.

While there is a trend to attach AI or machine learning to more conventional business models to attract funding, we are primarily interested in the startups that focus on the underlying technology. Generally, startups using new architectures built on commodity technology, or software layers implemented in FPGAs, vastly outnumber the ones that think beyond this phase. Finally, we’re interested in some of the nearer term disruptions related to process technology that include new materials-enabled memories for embedded applications, as well as lower latency memory for online training and inference operations.


Google Tensor Processing Unit is a trademark of Google LLC.

Tags: artificial intelligence, AI, machine learning, venture capital, Semiconductors

Michael Stewart

Now is the Time for Flat Optics

For many centuries, optical technologies have utilized the same principles and components to bend and manipulate light. Now, another strategy to control light—metasurface optics or flat optics—is moving out of academic labs and heading toward commercial viability.

Seeing a Bright Future for Flat Optics

We are at the beginning of a new technological era for the field of optics. To accelerate the commercialization of Flat Optics, a larger collaborative effort is needed to scale the technology and deliver its full benefits to a wide range of applications.

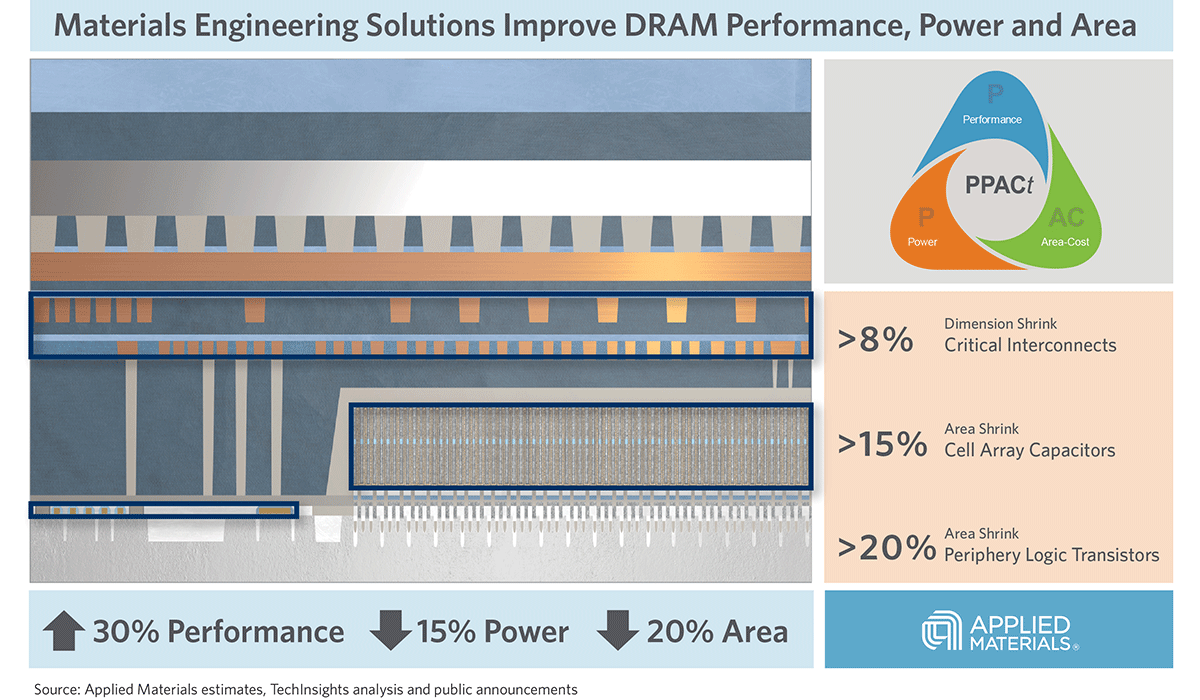

Introducing Breakthroughs in Materials Engineering for DRAM Scaling

To help the industry meet global demand for more affordable, high-performance memory, Applied Materials today introduced solutions that support three levers of DRAM scaling.