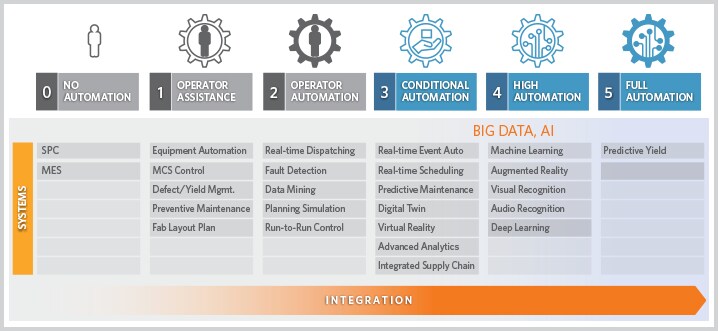

Figure 3. The evolution of performance tracking. Each of the four segments will become dashboard-driven with existing and new KPIs. (RR ILM is the Applied SmartFactory Rapid Response platform solution.)

The user must first gather all known information about the samples that violated limits, including the path through the various processes, the recipes, the product, and the resulting variation or shifts on the wafer.

Then, the user must make several quick assessments to begin the troubleshooting process. The first is to understand the severity of the failure, and the process equipment and wafers impacted by the condition. Depending on the severity, the process can be halted by recipe, or by chamber and equipment. Next, the user must evaluate the validity of the measurement, and ensure that the right number of raw data points are available from a process that has historically been reliable and repeatable.

Finally, the user must determine whether the failure stems from a prior process, from equipment drift, or from both.

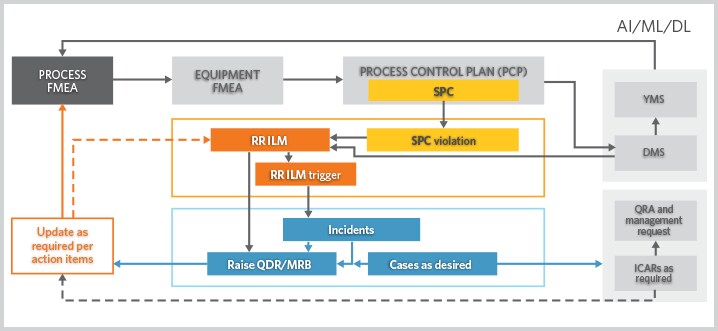

Outlining the variables behind these questions enables us to quickly create an automation roadmap. First, a process FMEA can be built into the behavior of the system to allow us to isolate the incoming failures. Then, an equipment FMEA can be built into the system. An analysis of variance (ANOVA) can then be developed to determine which of the two sources, incoming process or equipment, contributes more to process variation.
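To make the ANOVA step concrete, the sketch below partitions the total sum of squares of a measurement between two factors and reports each factor's share. It is an illustration only, not the method used by any particular product: the field names `prior_tool` and `chamber`, the sample values, and the helper functions are all hypothetical, and the simple partition is only exact for a balanced design (every factor combination measured equally often). A full ANOVA would add an F-test for significance.

```python
from collections import defaultdict
from statistics import mean


def factor_sum_of_squares(records, factor, value="value"):
    """Between-group sum of squares for one factor (its main effect)."""
    grand = mean(r[value] for r in records)
    groups = defaultdict(list)
    for r in records:
        groups[r[factor]].append(r[value])
    return sum(len(v) * (mean(v) - grand) ** 2 for v in groups.values())


def variation_shares(records, factors, value="value"):
    """Fraction of the total sum of squares attributed to each factor.

    Assumes a balanced design; otherwise the shares are only approximate.
    """
    grand = mean(r[value] for r in records)
    total = sum((r[value] - grand) ** 2 for r in records)
    shares = {f: factor_sum_of_squares(records, f, value) / total for f in factors}
    shares["residual"] = 1.0 - sum(shares.values())
    return shares


# Hypothetical data: two upstream tools, two chambers, two wafers per combination.
records = [
    {"prior_tool": "A", "chamber": "C1", "value": 97.4},
    {"prior_tool": "A", "chamber": "C1", "value": 97.6},
    {"prior_tool": "A", "chamber": "C2", "value": 98.4},
    {"prior_tool": "A", "chamber": "C2", "value": 98.6},
    {"prior_tool": "B", "chamber": "C1", "value": 101.4},
    {"prior_tool": "B", "chamber": "C1", "value": 101.6},
    {"prior_tool": "B", "chamber": "C2", "value": 102.4},
    {"prior_tool": "B", "chamber": "C2", "value": 102.6},
]

shares = variation_shares(records, ["prior_tool", "chamber"])
dominant = max(("prior_tool", "chamber"), key=shares.get)
print(shares, "dominant source:", dominant)
```

In this made-up data set the upstream tool accounts for most of the variation, which would point troubleshooting at the incoming process rather than at the chamber.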

Measurements will be made wafer-to-wafer and within-wafer, and most important of all, will include site-level measurements from wafer to wafer. The process FMEA will ultimately sort the variation sources against the fleet and consider tool and chamber matching as a set of variables. It will convert everything into a go/no-go action when a knob is not available to tune a variance.
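The three views of variation named above can be sketched from site-level data as follows. This is a minimal illustration under stated assumptions: every wafer is measured at the same sites in the same order, population variance is used throughout, and the wafer IDs, values, and function names are hypothetical.

```python
from statistics import mean, pvariance


def variance_components(site_data):
    """Split variation into within-wafer and wafer-to-wafer components.

    site_data maps a wafer ID to its list of site measurements.
    Assumes every wafer has the same number of sites.
    """
    within = mean(pvariance(sites) for sites in site_data.values())
    wafer_means = [mean(sites) for sites in site_data.values()]
    return {"within_wafer": within, "wafer_to_wafer": pvariance(wafer_means)}


def site_level_variance(site_data):
    """Variance of each site position across wafers (site-level, wafer to wafer)."""
    return [pvariance(column) for column in zip(*site_data.values())]


# Hypothetical film-thickness readings, five sites per wafer.
site_data = {
    "W1": [50.0, 50.2, 49.8, 50.1, 49.9],
    "W2": [50.5, 50.7, 50.3, 50.6, 50.4],
    "W3": [49.5, 49.7, 49.3, 49.6, 49.4],
}

components = variance_components(site_data)
per_site = site_level_variance(site_data)
print(components, per_site)
```

With equal site counts, the two components add up to the variance of all points pooled together, which gives a quick consistency check on the data.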

Closing the Loop

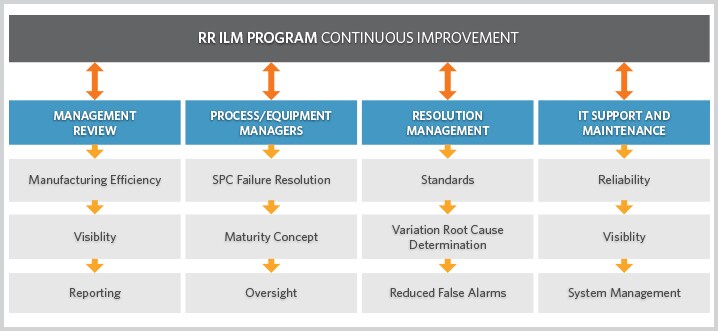

As previously mentioned, a learning system must be built that will ultimately update our understanding of the process and equipment FMEAs and evaluate the effectiveness of the decisions. One key metric will come from the fab’s yield management system (YMS), while another will come from a new set of KPIs centered on within-wafer variance, which will accompany the existing KPIs.

These new KPIs are typically not negotiated with customers, and each manufacturing step will have a new set that becomes available with AI systems. The standard quality KPIs will persist, but they will be augmented by new ones such as repeat violation rate, time to decision, quality of decision and others. All process and equipment learning will update the FMEA built into the AI system.
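Two of the KPIs named above can be computed from a simple event log, as in the sketch below. The definitions are assumptions made for illustration, since the text does not define them: a repeat violation is taken to be a violation on the same control chart within 24 hours of the previous one, and time to decision is the median interval between detection and decision. The `Violation` record and chart names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass(frozen=True)
class Violation:
    chart_id: str          # hypothetical identifier of the control chart
    detected_at: datetime  # when the limit violation was flagged
    decided_at: datetime   # when a disposition decision was recorded


def time_to_decision_minutes(events):
    """Median minutes from detection to decision."""
    return median((e.decided_at - e.detected_at).total_seconds() / 60 for e in events)


def repeat_violation_rate(events, window=timedelta(hours=24)):
    """Share of violations that recur on the same chart within `window` (assumed definition)."""
    last_seen = {}
    repeats = 0
    for e in sorted(events, key=lambda e: e.detected_at):
        previous = last_seen.get(e.chart_id)
        if previous is not None and e.detected_at - previous <= window:
            repeats += 1
        last_seen[e.chart_id] = e.detected_at
    return repeats / len(events) if events else 0.0


t0 = datetime(2024, 1, 1, 8, 0)
events = [
    Violation("etch-CD", t0, t0 + timedelta(minutes=4)),
    Violation("etch-CD", t0 + timedelta(hours=2), t0 + timedelta(hours=2, minutes=30)),
    Violation("cmp-thk", t0 + timedelta(hours=3), t0 + timedelta(hours=3, minutes=10)),
    Violation("etch-CD", t0 + timedelta(days=3), t0 + timedelta(days=3, minutes=6)),
]

print(repeat_violation_rate(events))     # 0.25: one of four events is a repeat
print(time_to_decision_minutes(events))  # 8.0: median of 4, 6, 10 and 30 minutes
```

Quality of decision is harder to reduce to a formula and would likely need feedback from downstream yield data, so it is left out of this sketch.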

Such changes have tremendous implications for fabs. Consider that a fab with 20,000 wafer starts per month (WSPM) typically experiences more than 2,500 events per month. Out-of-spec (OOS) events typically take between 25 and 40 minutes each to troubleshoot, and most of these OOS conditions become scrap when a rework is not possible. Out-of-control (OOC) events also impact processes, depending on a fab’s practices, and they frequently become OOS events if not kept in check.

The AI system will provide a speed-of-use component that will reduce OOS resolution time to under four minutes when an operator needs to confirm the results recommended by the system, and will reduce it even further under a full-automation scenario. This will constitute a major departure from current standards. Faster and higher-quality decisions translate into fewer repeat violations, further reducing interruptions.

In such a fab, the improvements offered by AI-based SPC systems are in the speed and quality of decisions. These improvements may translate into more than 600 hours of process time recovered per month for production, along with a further reduction of scrap of approximately 10% from current rates.
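As a back-of-the-envelope check on the scale of these savings, the sketch below relates event counts and troubleshooting times to hours recovered. It is not the derivation behind the 600-hour figure, which the text does not give. It assumes the 25 to 40 minute baseline and the four-minute target quoted above, and it treats the number of OOS events per month as an unknown, since only the combined event count of 2,500 is stated.

```python
def hours_recovered(events_per_month, minutes_before, minutes_after):
    """Hours saved per month if each event drops from minutes_before to minutes_after."""
    return events_per_month * (minutes_before - minutes_after) / 60.0


def events_needed(target_hours, minutes_before, minutes_after):
    """Number of events per month required to save target_hours."""
    return target_hours * 60.0 / (minutes_before - minutes_after)


# Upper bound: assume (hypothetically) that all 2,500 events are OOS events.
print(hours_recovered(2500, 25, 4))  # 875.0 hours at the 25-minute baseline
print(hours_recovered(2500, 40, 4))  # 1500.0 hours at the 40-minute baseline

# Event counts that would yield 600 recovered hours.
print(events_needed(600, 40, 4))     # 1000.0 events at the 40-minute baseline
print(events_needed(600, 25, 4))     # about 1714 events at the 25-minute baseline
```

Under these assumptions, recovering 600 hours corresponds to roughly 1,000 to 1,700 OOS events per month resolved in four minutes instead of 25 to 40, which fits within the 2,500 total events cited.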

Within-wafer variation reduction may also translate into yield gains at advanced nodes, though this has yet to be quantified. The same line of reasoning can be applied to other production process anomalies, such as those found through fault detection and electrical testing.

Conclusion

Developing AI-based next-generation quality systems will enable customers to make higher-quality decisions in a shorter amount of time by either fully automating decision-making or augmenting human decision-making.

Successfully implementing these systems will require deep industry experience, a creative and innovative mindset, and a strong technological foundation, such as Applied’s expansive SmartFactory portfolio of integrated capabilities. At its core, this portfolio utilizes industry-leading, best-of-breed technologies combined with industry-standard resources, collaborative design and methodical research to help customers propel quality to new levels.